HUMAN CENTERED DESIGN | TESTING

A/B/n Testing

60 min+

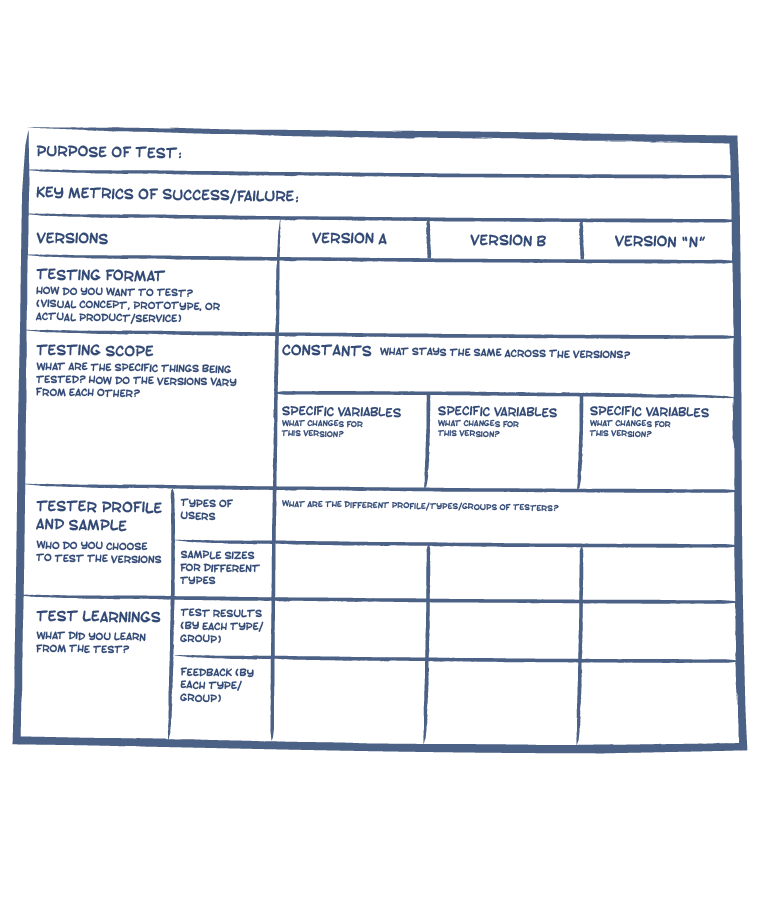

A/B testing, at its most basic, is an experiment that compares two versions of a prototype (of a product or service) to figure out which one performs better. A/B/n testing extends this to three or more variations; the “n” stands for the number of variations being tested. While the term is generally used in web development, the approach applies to other testing contexts too.
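In a digital A/B/n test, each participant is assigned to exactly one variation, and a chosen metric is compared across the groups. The sketch below is illustrative, not a prescribed method. It assumes a web context, a binary "conversion" outcome as the performance metric, and hypothetical variant labels `A`, `B` and `C`. The function names are also hypothetical. Participants are bucketed by hashing their ID so that each one always sees the same variation.

```python
import hashlib
from collections import defaultdict

# Hypothetical variant labels; "n" in A/B/n is len(VARIANTS).
VARIANTS = ["A", "B", "C"]


def assign_variant(user_id: str, variants=VARIANTS) -> str:
    """Deterministically bucket a user into one variant by hashing their ID.

    The same user ID always maps to the same variant across visits.
    """
    digest = hashlib.sha256(user_id.encode("utf-8")).hexdigest()
    return variants[int(digest, 16) % len(variants)]


def conversion_rates(events):
    """Compute the share of converted users per variant.

    events: iterable of (variant, converted) pairs, where converted is a bool
    (an assumed, simplified metric for "performs better").
    """
    shown = defaultdict(int)
    converted = defaultdict(int)
    for variant, did_convert in events:
        shown[variant] += 1
        if did_convert:
            converted[variant] += 1
    return {v: converted[v] / shown[v] for v in shown}


def pick_leader(rates):
    """Return the variant with the highest observed conversion rate."""
    return max(rates, key=rates.get)


if __name__ == "__main__":
    events = [("A", True), ("A", False), ("B", True), ("C", False)]
    rates = conversion_rates(events)
    print(rates, pick_leader(rates))
```

The "leader" here is simply the highest observed rate. In practice, teams usually also check whether the differences between variations are statistically significant before choosing one, especially when each group is small.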

USE CASES

- Test low-fidelity service or product concepts and prototypes.

- Test high-fidelity, experienceable prototypes that are close to final production.

- Test variations of specific components, journeys, features, etc. of a product or service already in use, especially digital ones.

LIMITATIONS

It may not be feasible to test every aspect of the design comprehensively. Teams must decide what to test, how many versions to test, and why.